AI Glossary for Software Engineers in 2026

Stop vibe coding and start engineering. Learn the essential AI glossary to build reliable systems with MCP, agents, and rigorous context management.

While 2024 was defined by developers intuitively prompting their way through basic tasks, 2026 is the year of rigorous AI engineering. As we move from simple chatbots to complex agentic systems, the distance between magic and mechanics is narrowing.

Software engineering has always been about managing abstractions and protocols. The transition to AI-integrated development is no different. To build reliable systems, we must move past the hype and master the technical foundation behind these models.

This post provides the essential terms for the modern AI engineering stack.

A shared language is the only way to move from brittle experimental projects to production-ready systems. Vibes are great, but it’s time to start engineering.

In this post, you’ll learn:

The technical basics of modern foundation models and how they learn.

How to manage context and tokens to avoid reasoning degradation.

The protocols and architectures that allow AI agents to interact with external tools.

Modern development paradigms and verification strategies for agentic workflows.

Foundations

Foundation Models & LLMs

Foundation Models are the base layer of our new stack. These models are trained on massive, diverse datasets, allowing them to adapt to a wide range of tasks without needing to be built from scratch. Large Language Models (LLMs) are a specific type of foundation model optimized for processing and generating human language, although modern versions are increasingly multimodal, handling text, images, and audio.

The Transformer Architecture & Attention

The Transformer Architecture is the core innovation that made modern AI possible. Using a mechanism called Attention, these models process all parts of an input sequence at once instead of reading it word by word. Attention lets every token weigh its relationship to every other token, and this parallel processing allows the model to capture complex relationships and nuances in code or text that earlier sequential techniques, such as recurrent neural networks, struggled with.
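To make Attention less abstract, here is a minimal sketch of scaled dot-product attention, the core operation inside a Transformer, in plain Python. The toy 2-dimensional vectors are made up for illustration; real models derive queries, keys, and values through learned projections, use many attention heads, and run on tensor libraries.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    Each query is scored against every key, so every position can draw on
    every other position. Real implementations compute this as matrix
    multiplications, which is what makes it parallel.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity between this query and each key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Toy self-attention over a 3-token sequence of 2-d vectors (Q = K = V = x).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(x, x, x):
    print([round(value, 3) for value in row])
```

Each output row is a weighted blend of all the value vectors, which is how one token ends up “looking at” every other token in the sequence.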

Training, Fine-tuning, and RLHF

Training is the process of building the model’s base intelligence. Pre-training establishes general knowledge from huge corpora such as web text and books. Fine-tuning is a post-training technique where you perform additional training on specific data, like your company’s codebase, to specialize the model. RLHF (Reinforcement Learning from Human Feedback) is then used to align the model’s responses with human intent and safety standards, making the output more likely to be genuinely helpful for a developer.

Inference & Tokens

Inference is the act of running the model to generate a response. Its cost and performance are determined by Tokens, the basic units of data the model processes. Modern engineers must track the Context Window (the model’s memory limit, also called Token Window or Context Memory) and use Cache Tokens (prompt caching) to cut latency and cost by reusing frequently used prompt prefixes. Optimized inference also boosts performance: OpenAI’s Codex 5.3 spark, for example, runs at around 1,000 tokens per second.
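Prompt caching rewards prompts that keep a stable prefix. The sketch below is a simplified model, not any provider’s actual caching algorithm: it splits text on whitespace as a stand-in for a real subword tokenizer, and counts how many leading tokens a new request shares with the previous one. Only that shared prefix could be served from cache. Providers typically cache in blocks and require a minimum prefix length, so treat the numbers as illustrative.

```python
def tokenize(text: str) -> list[str]:
    """Crude stand-in for a real tokenizer (real ones use subword units)."""
    return text.split()

def shared_prefix_len(a: list[str], b: list[str]) -> int:
    """Number of leading tokens two sequences have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n

def cache_report(previous: str, current: str, window: int) -> dict:
    """Estimate how much of `current` could reuse the cached prefix of `previous`."""
    prev, cur = tokenize(previous), tokenize(current)
    cached = shared_prefix_len(prev, cur)
    return {
        "total_tokens": len(cur),
        "cacheable_tokens": cached,         # prefix that could come from the cache
        "new_tokens": len(cur) - cached,    # must be processed from scratch
        "fits_window": len(cur) <= window,  # stays within the context window?
    }

system = "You are a code reviewer. Follow the team style guide strictly."
# Stable prefix first, variable part last.
r1 = cache_report(system + " Review file a.py", system + " Review file b.py", window=8000)
# Variable part first: the shared prefix is almost gone.
r2 = cache_report("Review file a.py " + system, "Review file b.py " + system, window=8000)
print(r1)
print(r2)
```

In `r1`, 13 of 14 tokens are reusable; in `r2`, only 2 are. That is why stable content such as system prompts and rules belongs at the start of the prompt.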

The Interface

Prompt Engineering & Prompt Libraries

Prompt Engineering is the systematic practice of crafting inputs to get the best possible response from a model. It involves more than just writing instructions; it includes managing Prompt Libraries, which are versioned, tested collections of prompts used across a team or application to ensure consistent and high-quality outputs.
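A prompt library can start as versioned templates that live in the repository and go through code review like any other code. Here is a minimal sketch using Python’s `string.Template`; the prompt names, version strings, and registry layout are hypothetical, not a standard format.

```python
from string import Template

# Hypothetical registry: (prompt name, version) -> template.
PROMPTS = {
    ("code_review", "1.0"): Template("Review this $language diff for bugs:\n$diff"),
    ("code_review", "1.1"): Template(
        "You are a senior $language reviewer. List bugs and security issues "
        "in this diff, most severe first:\n$diff"
    ),
}

def render(name: str, version: str, **params: str) -> str:
    """Render a pinned prompt version.

    Raises KeyError for an unknown (name, version) pair or a missing parameter,
    because Template.substitute() fails loudly instead of leaving a placeholder.
    """
    return PROMPTS[(name, version)].substitute(**params)

prompt = render("code_review", "1.1", language="Python", diff="- x = 1\n+ x = 2")
print(prompt)
```

Pinning the version in calling code turns every prompt change into an explicit, reviewable diff, and it lets you compare the old and new versions side by side before switching.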

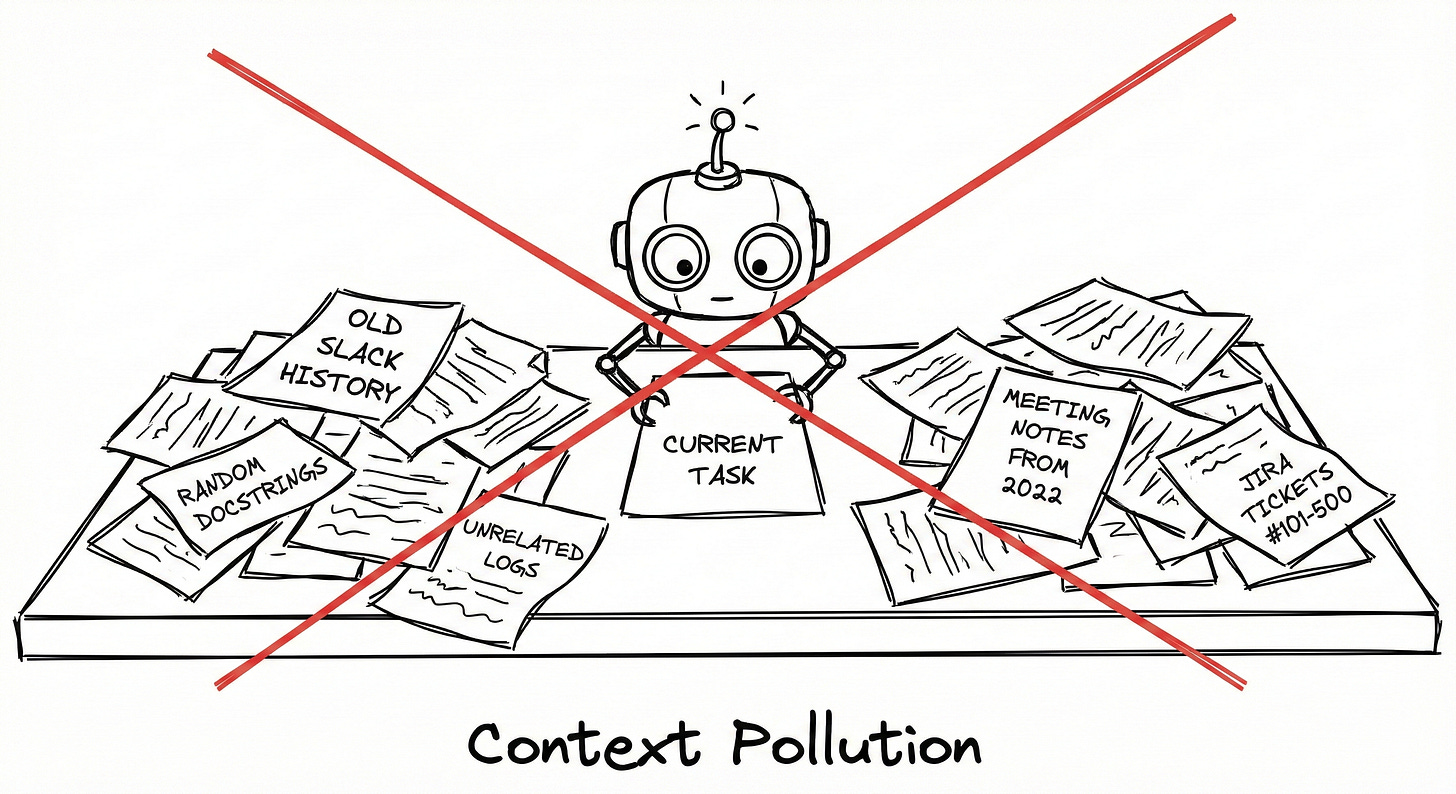

Static Context & Dynamic Context

Context management is the most important factor in model performance. Static Context refers to stable, long-lived information like your entire codebase or architectural standards. Dynamic Context is the temporary, task-specific information for the current interaction, such as current problem descriptions, intermediate code versions, and debugging information.
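One practical consequence of the static/dynamic split is ordering: stable material goes first (where it can benefit from prompt caching), and the task-specific material follows, trimmed to fit a budget. A minimal sketch under simplifying assumptions: token counts are approximated by word counts, and the oldest dynamic items are dropped first. The context strings are hypothetical.

```python
def count_tokens(text: str) -> int:
    """Rough approximation: one token per word (real tokenizers differ)."""
    return len(text.split())

def build_context(static: list[str], dynamic: list[str], budget: int) -> str:
    """Static context first, then as many of the newest dynamic items as fit."""
    used = sum(count_tokens(part) for part in static)
    if used > budget:
        raise ValueError("static context alone exceeds the budget")
    kept: list[str] = []
    # Walk dynamic items newest-first so the most recent information survives.
    for item in reversed(dynamic):
        cost = count_tokens(item)
        if used + cost > budget:
            break
        kept.append(item)
        used += cost
    # Restore chronological order for the dynamic part.
    return "\n".join(static + list(reversed(kept)))

static = ["Architecture: hexagonal, Python 3.12", "Style: black, type hints required"]
dynamic = [
    "Old stack trace from yesterday's run",
    "Failing test: test_login",
    "Current diff: auth.py",
]
ctx = build_context(static, dynamic, budget=16)
print(ctx)
```

With a 16-token budget, both static entries and the two newest dynamic items fit, and the stale stack trace is dropped.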

Multi-Turn Conversations

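Multi-Turn Conversations involve multiple round trips between the human and the machine. With most chat APIs the model does not remember earlier calls, so the application keeps the state by sending the conversation history with every request. This back-and-forth interaction is what enables agentic behavior, allowing the AI to maintain state, plan, and adapt dynamically to new information over the course of a session.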
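The sketch below shows where the “state” of a multi-turn conversation actually lives: in a message list the application grows and re-sends on each turn. `fake_model` is a hypothetical stand-in for a real API call, and the `system`/`user`/`assistant` roles follow the common chat-API convention rather than any one provider’s exact schema.

```python
from typing import Callable

Message = dict[str, str]

class Conversation:
    """Keeps the history a stateless chat API needs on every call."""

    def __init__(self, system_prompt: str, model: Callable[[list[Message]], str]):
        self.messages: list[Message] = [{"role": "system", "content": system_prompt}]
        self.model = model  # any function mapping a message list to a reply

    def ask(self, user_text: str) -> str:
        self.messages.append({"role": "user", "content": user_text})
        reply = self.model(self.messages)  # the whole history goes out each turn
        self.messages.append({"role": "assistant", "content": reply})
        return reply

def fake_model(messages: list[Message]) -> str:
    """Stand-in for a real model call: reports how much history it received."""
    user_turns = sum(1 for m in messages if m["role"] == "user")
    return f"I have seen {user_turns} user message(s)."

chat = Conversation("You are a helpful pair programmer.", fake_model)
first = chat.ask("Write a function that parses ISO dates.")
second = chat.ask("Now add timezone support.")
print(first)   # I have seen 1 user message(s).
print(second)  # I have seen 2 user message(s).
```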

Hallucinations & Grounding

A Hallucination occurs when an AI model generates factually incorrect or fabricated information. To reduce hallucinations, engineers use Grounding: providing the model with verifiable facts and context (via RAG or MCP) so that its responses are based on reality rather than on its training weights alone.
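A simple grounding pattern is to give the model numbered sources, ask it to cite them, and then check mechanically that every citation points at a source you actually provided. A minimal sketch; the `[S1]` citation format and the prompt wording are assumptions, and this check only catches fabricated citations, not sources the model misread.

```python
import re

def grounded_prompt(question: str, sources: list[str]) -> str:
    """Build a prompt that restricts the model to the numbered sources."""
    numbered = "\n".join(f"[S{i}] {text}" for i, text in enumerate(sources, start=1))
    return (
        "Answer using ONLY the sources below and cite them like [S1]. "
        "If the sources do not contain the answer, say so.\n\n"
        f"{numbered}\n\nQuestion: {question}"
    )

def invalid_citations(answer: str, num_sources: int) -> list[str]:
    """Return citations in the answer that do not match any provided source."""
    cited = re.findall(r"\[S(\d+)\]", answer)
    return [f"[S{n}]" for n in cited if not 1 <= int(n) <= num_sources]

sources = ["The /users endpoint requires an API key.", "Rate limit: 100 requests/minute."]
print(grounded_prompt("How fast can I call /users?", sources))
print(invalid_citations("Up to 100 requests/minute [S2], per the SLA [S3].", len(sources)))
```

The second call flags `[S3]`, a source the model was never given, which is a cheap signal to reject or regenerate the answer.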

The Action Layer

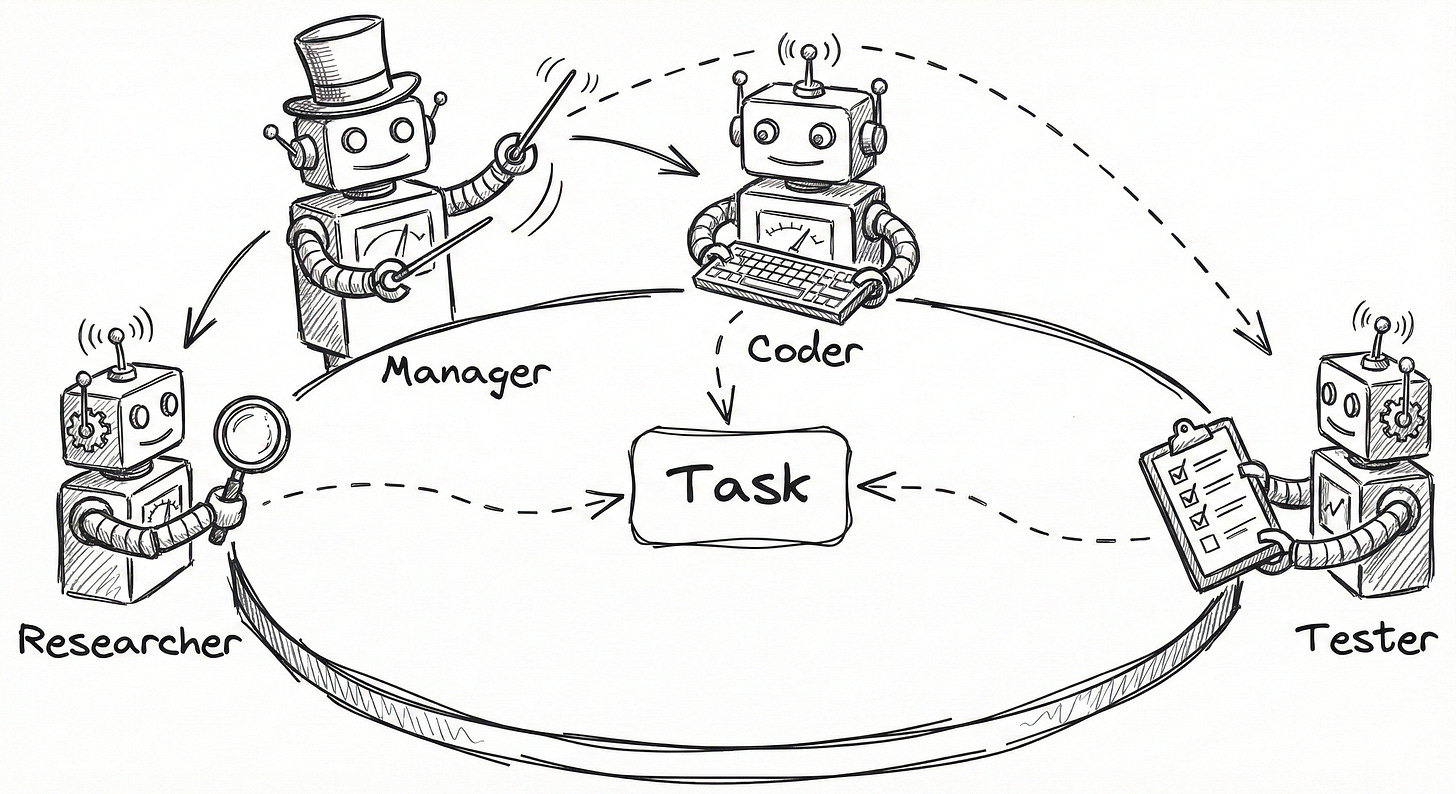

AI Agents & Agent Swarms

An AI Agent is a system designed to perform tasks autonomously by perceiving its environment and using tools. Unlike a simple chatbot, an agent maintains its own state and operates in a loop toward a specific goal. Think of it as executing prompts in a loop: you send only the first one, and outputs such as the results of a shell command become the next prompts. An Agent Swarm is a multi-agent system where specialized agents (e.g., a “Researcher” and a “Coder”) collaborate to solve complex problems.
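The “prompts in a loop” idea fits in a few lines. In this sketch the model is a scripted stand-in (`scripted_model`, hypothetical) that either requests a tool call or returns a final answer; a real agent would call an LLM API with tool definitions. Tool results are appended to the history and become the next input, and a step limit keeps the loop from running forever.

```python
from typing import Callable

# Hypothetical tools the agent may call, keyed by name.
TOOLS: dict[str, Callable[[str], str]] = {
    "run_tests": lambda arg: "2 passed, 1 failed: test_parse_date",
    "read_file": lambda arg: f"<contents of {arg}>",
}

def scripted_model(history: list[dict]) -> dict:
    """Stand-in for an LLM: gathers evidence with tools, then answers."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "run_tests", "arg": ""}
    if len(tool_results) == 1:
        return {"tool": "read_file", "arg": "dates.py"}
    return {"answer": "test_parse_date fails; the fix belongs in dates.py."}

def run_agent(goal: str, model, tools, max_steps: int = 5) -> str:
    history = [{"role": "user", "content": goal}]  # the only prompt a human sends
    for _ in range(max_steps):
        action = model(history)
        if "answer" in action:
            return action["answer"]
        history.append({"role": "assistant", "content": str(action)})
        # Execute the requested tool; its output becomes the next "prompt".
        result = tools[action["tool"]](action["arg"])
        history.append({"role": "tool", "content": result})
    return "Stopped: step limit reached."

print(run_agent("Find out why the build is red.", scripted_model, TOOLS))
```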

Skills & Rules

To make agents reliable, we use Skills and Rules. A Skill is the knowledge and scripts that teach an agent how to execute a specific task, like a security audit. Skills are loaded into the context window only when needed. Rules are the constraints and guidelines, often defined in a project’s configuration, that the agent must strictly follow during execution.
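Loading skills “only when needed” can be sketched as progressive disclosure: the agent always sees the rules and a one-line description of each skill, and a skill’s full body is added only when the task matches it. The skill names, the keyword matching, and the data layout below are hypothetical; real agent tools define their own skill and rule file formats.

```python
from dataclasses import dataclass

@dataclass
class Skill:
    name: str
    description: str    # always in context: cheap to include
    keywords: set[str]  # hypothetical trigger words
    body: str           # full instructions/scripts: loaded on demand

RULES = ["Never commit secrets.", "All new code needs tests."]

SKILLS = [
    Skill("security-audit", "Audit code for vulnerabilities.",
          {"security", "audit", "vulnerability"},
          "Step 1: grep for hard-coded credentials. Step 2: check input validation."),
    Skill("db-migration", "Write safe schema migrations.",
          {"migration", "schema"},
          "Always write a reversible migration and back up the table first."),
]

def build_agent_context(task: str) -> str:
    words = set(task.lower().split())
    lines = ["RULES (always apply):", *RULES, "AVAILABLE SKILLS:"]
    lines += [f"- {s.name}: {s.description}" for s in SKILLS]
    for skill in SKILLS:
        if skill.keywords & words:  # only matching skills get their full body loaded
            lines += [f"SKILL {skill.name}:", skill.body]
    return "\n".join(lines)

ctx = build_agent_context("Run a security audit on the login module")
print(ctx)
```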

Model Context Protocol (MCP)

The Model Context Protocol (MCP) is the open standard that connects AI agents to their tools and data. It defines three primary primitives: Tools (executable functions), Resources (read-only data like files), and Prompts (reusable templates). MCP decouples the AI model from the tools it uses, providing a standardized integration layer across different model providers. At least, that’s the initial MCP release; the protocol has gained many new features since (check the next article for a closer look at MCP 😄).
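To make the Tools primitive concrete, here is a minimal sketch of how a server might answer the `tools/list` and `tools/call` methods. It hand-builds the JSON payloads without any SDK and leaves out the `initialize` handshake, capability negotiation, and transport; the `get_weather` tool is hypothetical. The field names (`name`, `description`, `inputSchema`, `content`, `isError`) follow the MCP specification as I understand it, so check the current spec revision before relying on them.

```python
import json

# A hypothetical tool, described with a JSON Schema for its arguments.
TOOL_DEFINITIONS = [{
    "name": "get_weather",
    "description": "Get the current weather for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}]

def get_weather(city: str) -> str:
    return f"Sunny in {city}"  # stand-in for a real weather API call

TOOL_HANDLERS = {"get_weather": get_weather}

def handle(request: dict) -> dict:
    """Answer MCP tools/list and tools/call requests (JSON-RPC 2.0 envelope)."""
    req_id, method = request["id"], request["method"]
    if method == "tools/list":
        return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": TOOL_DEFINITIONS}}
    if method == "tools/call":
        params = request["params"]
        handler = TOOL_HANDLERS.get(params["name"])
        if handler is None:  # -32602 is JSON-RPC's "Invalid params" code
            return {"jsonrpc": "2.0", "id": req_id,
                    "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"}}
        text = handler(**params.get("arguments", {}))
        return {"jsonrpc": "2.0", "id": req_id,
                "result": {"content": [{"type": "text", "text": text}], "isError": False}}
    return {"jsonrpc": "2.0", "id": req_id,
            "error": {"code": -32601, "message": "Method not found"}}

call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "get_weather", "arguments": {"city": "Lisbon"}}}
print(json.dumps(handle(call), indent=2))
```

The client only ever sees names, descriptions, and schemas, which is what lets the same server plug into any model provider whose client speaks MCP.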

JSON-RPC

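JSON-RPC 2.0 is the lightweight remote procedure call protocol that carries messages between MCP clients and servers. It is stateless and transport-agnostic (MCP sends it over transports such as stdio or HTTP), and it gives agents a simple way to send requests and receive structured responses: each request names a method and carries parameters plus an id, and the matching response echoes that id with either a result or an error.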
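The protocol is small enough to dispatch by hand. A minimal sketch of handling one JSON-RPC 2.0 message: parse it, look up the method, and reply with a `result` or a standard `error` object (the error codes are the ones the JSON-RPC 2.0 specification reserves). Notifications, meaning requests without an `id`, get no reply. The `add` method is hypothetical, and batch requests are left out.

```python
import json
from typing import Optional

# Hypothetical method table: name -> function taking the params object.
METHODS = {"add": lambda params: params["a"] + params["b"]}

def error(id_, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": id_, "error": {"code": code, "message": message}}

def dispatch(raw: str) -> Optional[str]:
    """Handle one JSON-RPC 2.0 message; return the reply, or None for a notification."""
    try:
        req = json.loads(raw)
    except json.JSONDecodeError:
        return json.dumps(error(None, -32700, "Parse error"))
    if (not isinstance(req, dict) or req.get("jsonrpc") != "2.0"
            or not isinstance(req.get("method"), str)):
        return json.dumps(error(None, -32600, "Invalid Request"))
    req_id = req.get("id")
    method = METHODS.get(req["method"])
    if method is None:
        reply = error(req_id, -32601, "Method not found")
    else:
        try:
            reply = {"jsonrpc": "2.0", "id": req_id, "result": method(req.get("params", {}))}
        except (KeyError, TypeError):
            reply = error(req_id, -32602, "Invalid params")
    # Notifications (no "id") never get a reply, not even an error.
    return None if "id" not in req else json.dumps(reply)

print(dispatch('{"jsonrpc": "2.0", "id": 1, "method": "add", "params": {"a": 2, "b": 3}}'))
# {"jsonrpc": "2.0", "id": 1, "result": 5}
```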

Building with AI

Vibe Coding

Vibe Coding is a methodology where natural language conversation is the primary interface for code generation, execution, and debugging. The developer orchestrates the project through a dialogue with the AI rather than typing code line by line. The term has been misused by people without a technical background to claim that software engineers will soon have no jobs, and the software those people ship often carries serious security risks and flaws.

Spec-Driven Development

Spec-Driven Development is a rigorous form of AI-assisted coding where a high-level specification (like a DESIGN.md or REQUIREMENTS.md) acts as the absolute source of truth for the model’s generation. Personal take: the idea is too strict and hard to manage. Markdown specs are harder to review, especially given LLMs’ tendency to keep adding content instead of removing it. However, it’s useful to treat it as a “plan mode”, where you review the plan before the AI writes any code.

Task Graphs & Leaf Nodes

Complexity is managed by breaking projects into Task Graphs, where work is represented as interconnected nodes of tasks. A Leaf Node is a small, independent task that an AI can handle in a single turn. Because leaf nodes are quick to review and independent ones can be worked on in parallel, this approach can accelerate implementation dramatically, potentially up to 10x.
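A task graph can be sketched with Python’s standard `graphlib` module (Python 3.9+). The sketch finds the leaf nodes of a decomposition tree, then groups them into batches whose prerequisites are already done, so every task within a batch could be handed to a separate agent at the same time. The task names and dependencies are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical decomposition: task -> subtasks it was broken into.
DECOMPOSITION = {
    "add-login": ["auth-api", "login-ui"],
    "auth-api": ["user-schema", "token-endpoint"],
    "login-ui": ["login-form", "ui-styles"],
}

# Hypothetical dependencies between leaf tasks: task -> prerequisites.
DEPENDS_ON = {
    "token-endpoint": {"user-schema"},
    "login-form": {"token-endpoint"},
}

def leaf_nodes(tree: dict[str, list[str]], root: str) -> list[str]:
    """Tasks under `root` that were not decomposed any further."""
    children = tree.get(root, [])
    if not children:
        return [root]
    return [leaf for child in children for leaf in leaf_nodes(tree, child)]

def execution_batches(leaves: list[str], deps: dict[str, set[str]]) -> list[list[str]]:
    """Group leaves into batches; every task in a batch has its prerequisites done."""
    sorter = TopologicalSorter({leaf: deps.get(leaf, set()) for leaf in leaves})
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # independent tasks: can run in parallel
        batches.append(ready)
        sorter.done(*ready)
    return batches

leaves = leaf_nodes(DECOMPOSITION, "add-login")
print(leaves)
print(execution_batches(leaves, DEPENDS_ON))
```

Here `ui-styles` and `user-schema` can start immediately in parallel, while `token-endpoint` and `login-form` wait for their prerequisites.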

V&V (Verification & Validation)

V&V (Verification & Validation) are the critical safety nets for agentic loops. Verification ensures the generated code meets technical requirements (like passing tests), while Validation ensures the code actually solves the user’s intended problem.
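The two checks answer different questions, and an agent loop benefits from running both. In this hypothetical example, an AI-generated `slugify` function passes its unit tests (verification) but still misses the user’s actual goal of producing URL-safe slugs (validation). The function, tests, and sample titles are all made up for illustration.

```python
# Hypothetical AI-generated function under review.
def generated_slugify(title: str) -> str:
    return title.lower().replace(" ", "-")

def verify(fn) -> bool:
    """Verification: does the code meet its technical spec (the unit tests)?"""
    return fn("Hello World") == "hello-world" and fn("") == ""

def validate(fn) -> bool:
    """Validation: does it solve the user's real problem (URL-safe slugs
    for the titles users actually write)?"""
    allowed = set("abcdefghijklmnopqrstuvwxyz0123456789-")
    real_titles = ["C++ Tips & Tricks", "What's new?"]
    return all(set(fn(title)) <= allowed for title in real_titles)

print("verified:", verify(generated_slugify))     # verified: True
print("validated:", validate(generated_slugify))  # validated: False
```

A loop that only checks tests would stop here; adding the validation check sends the agent back to handle characters like `+`, `&`, and `?`.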

Evals

Evals are the unit tests of AI engineering. They are structured, automated benchmarks used to measure how well an AI system performs on specific tasks, such as correctness, safety, or tone. Evals allow engineers to quantify progress and catch regressions as models or prompts change.
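A minimal eval harness sketch: a fixed set of labelled cases, a scoring function, and a pass rate compared against a stored baseline to flag regressions. `classify_ticket` is a hypothetical stand-in for the AI system under test (in practice, a prompt plus a model call), and exact-match scoring is the simplest choice; many evals use fuzzier or model-based graders.

```python
# Hypothetical labelled cases: (input, expected label).
CASES = [
    ("App crashes when I click save", "bug"),
    ("Please add dark mode", "feature"),
    ("How do I reset my password?", "question"),
    ("Error 500 on checkout", "bug"),
    ("The export button does nothing", "bug"),
]

def classify_ticket(text: str) -> str:
    """Stand-in for the AI system under test (prompt + model call in practice)."""
    lowered = text.lower()
    if "crash" in lowered or "error" in lowered:
        return "bug"
    if lowered.endswith("?"):
        return "question"
    return "feature"

def run_eval(system, cases) -> float:
    """Fraction of cases where the output exactly matches the expected label."""
    passed = sum(1 for text, expected in cases if system(text) == expected)
    return passed / len(cases)

BASELINE = 0.75  # hypothetical score of the previous prompt/model version
score = run_eval(classify_ticket, CASES)
regression = score < BASELINE  # in CI, fail the build when this is True
print(f"score={score:.2f} regression={regression}")
```

The last case is misclassified, so the score is 0.80: still above the baseline, but the failing case tells you exactly where the next prompt change should focus.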

RAG (Retrieval-Augmented Generation)

RAG (Retrieval-Augmented Generation) is a technique that enhances AI model responses by retrieving relevant information from a knowledge base at runtime. By giving the model an “open-book” exam with your proprietary code and documentation, RAG can significantly reduce hallucinations and provide up-to-date context.
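A minimal RAG sketch: score documents against the query, keep the best matches, and place them in the prompt. To stay self-contained it ranks by simple word overlap; production systems typically use embeddings and a vector index instead. The documents and prompt wording are hypothetical.

```python
import re

DOCS = {
    "auth.md": "Login uses OAuth tokens that expire after one hour.",
    "deploy.md": "Deploys run through the CI pipeline on every merge to main.",
    "db.md": "The orders table is partitioned by month.",
}

def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Names of up to k documents sharing the most words with the query,
    skipping documents with no overlap at all."""
    q = words(query)
    ranked = sorted(docs, key=lambda name: len(q & words(docs[name])), reverse=True)
    return [name for name in ranked[:k] if q & words(docs[name])]

def rag_prompt(query: str, docs: dict[str, str]) -> str:
    """Put the retrieved documents in the prompt as the model's open book."""
    context = "\n".join(f"[{name}] {docs[name]}" for name in retrieve(query, docs))
    return f"Use the context to answer.\n\nContext:\n{context}\n\nQuestion: {query}"

print(rag_prompt("When do login tokens expire?", DOCS))
```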

Conclusion

Mastering the terms of AI engineering is the first step toward building professional systems. You can’t build what you can’t name. In fact, you can’t prompt an AI to build what you can’t name.

If you understand how to manage context, tokens, and agentic loops, you are no longer just a user of AI tools; you’re a power user. The best part is that being a power user doesn’t make you just 10% better than someone who isn’t, but far more effective.

You are now a builder in a world where tokens are a commodity. AI providers offer subscriptions with decent usage limits, and companies are putting more money into AI. The challenge is to take these AI concepts and apply them to your daily workflow. You’ve now taken the first step toward delivering your best work.

Let’s keep building!

If you found value in this post:

❤️ Click the heart to help others find it.

✉️ Subscribe to get the next one in your inbox.

💬 Leave a comment with your biggest takeaway

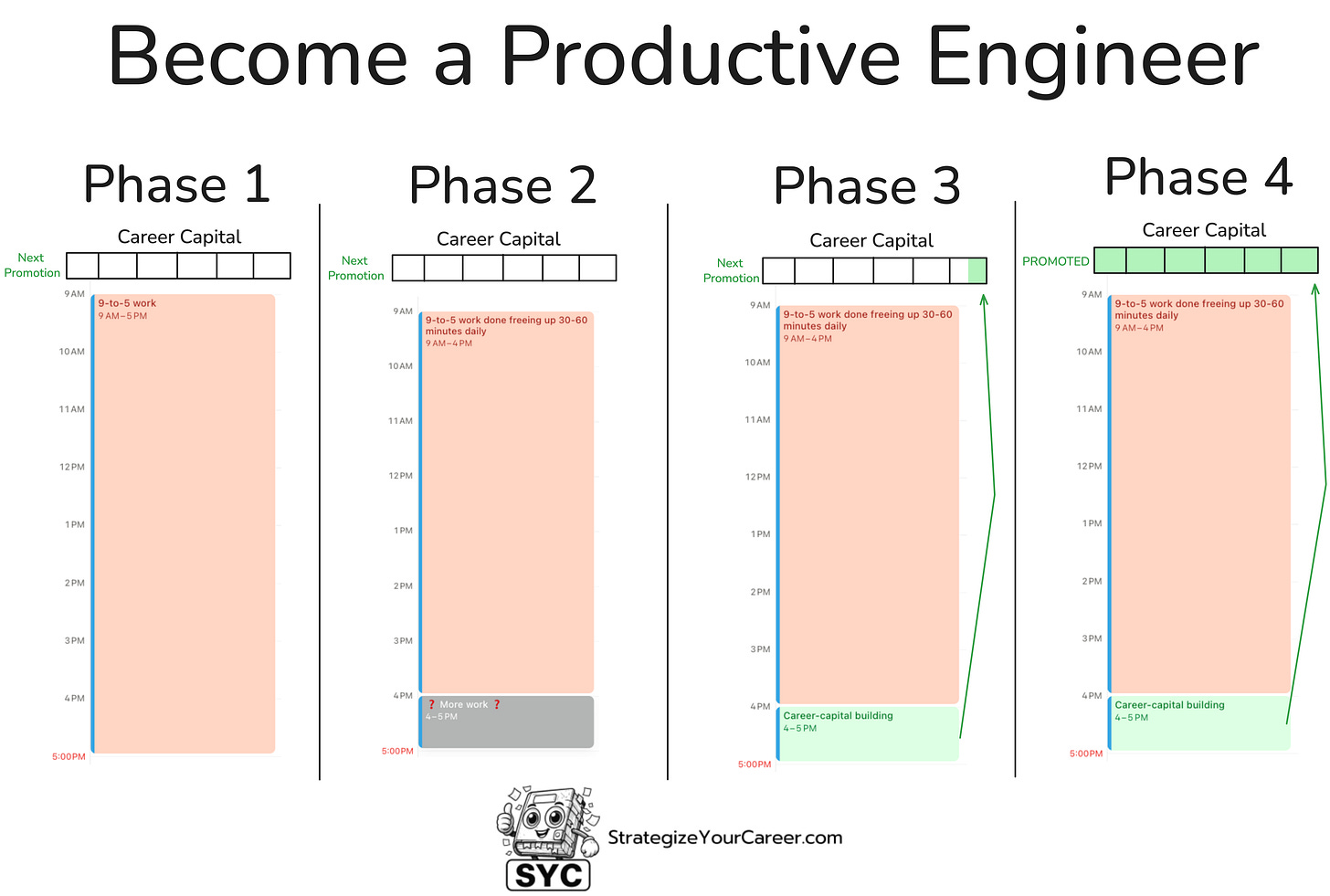

This article is part of our system for moving from phase 2 to phase 3. Mastering AI will help you earn the XP points you need for your next promotion, since it’s becoming an important promotion criterion.

I’m building this system for paid subscribers. Thanks for your continued support!


👏 Weekly applause

Here are some articles I enjoyed from the past week

Learning Tracks: Become an Engineering Multiplier by Gregor Ojstersek. As AI tools handle more basic programming tasks, your organizational skills become your true competitive advantage.

Airbnb’s Move from Monolith by Saurabh Dashora. Moving from a monolith to microservices needs a strategy.


The fancy images are likely AI-generated; the not-so-fancy ones are by me :)